Abstract: How do weapons inspections alter international bargaining environments? While conventional wisdom focuses on informational aspects, this paper focuses on inspections’ impact on the cost of a potential program: weapons inspectors shut down the most efficient avenues to development, forcing rising states to pursue more costly means to develop arms. To demonstrate the corresponding positive effects, this paper develops a model of negotiating over hidden weapons programs in the shadow of preventive war. If the cost of arms is large, efficient agreements are credible even if declining states cannot observe violations. However, if the cost is small, a commitment problem leads to a positive probability of preventive war and costly weapons investment. Equilibrium welfare under this second outcome is mutually inferior to the equilibrium welfare of the first outcome. Consequently, both rising states and declining states benefit from weapons inspections even if those inspections cannot reveal all private information.

If you are here for the long haul, you can download the chapter on the purpose of weapons inspections here. Since it is a later chapter of my dissertation, here is a quick version of the basic “butter-for-bombs” model for background:

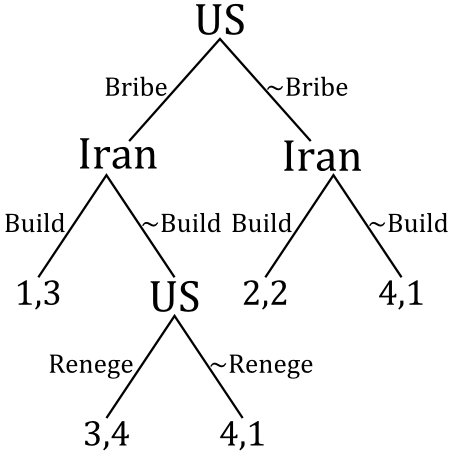

Imagine a two-period game between R (the rising state) and D (the declining state). In the first period, D makes an offer x to R, to which R responds by accepting, rejecting, or building weapons. Accepting locks in the proposal; R receives x and D receives 1 – x for the rest of time. Rejecting locks in war payoffs; R receives p – c_R and D receives 1 – p – c_D. Building requires R to pay a cost k > 0. D responds to building by either preventing, which locks in the war payoffs from before, or advancing to the post-shift state of the world.

In the post-shift state, D makes a second offer y to R, which R accepts or rejects. Accepting locks in the offer for the rest of time. Rejecting leads to war payoffs; R receives p’ – c_R and D receives 1 – p’ – c_D, where p’ > p. Thus, R fares better in war post-shift and D fares worse.

As usual, the actors share a common discount factor δ.

The main question is whether D can buy off R. Perhaps surprisingly, the answer is yes, and easily so. To see why, note that even if R builds, it only receives a larger portion of the pie in the later stage. Specifically, D must offer p’ – c_R to appease R and will do so, since provoking war leads to unnecessary destruction. Thus, if R ever builds, it receives p’ – c_R for the rest of time.
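To put numbers on the post-shift stage, here is a minimal Python sketch. The function names and the parameter values (p’ = 0.7, c_R = 0.1, c_D = 0.15) are illustrative assumptions, not values from the chapter. It only checks that D does better by offering p’ – c_R than by provoking war.

```python
import math

def post_shift_offer(p_shift, c_r):
    """Smallest offer y that R accepts post-shift: R's war payoff p' - c_R."""
    return p_shift - c_r

def d_payoff_from_deal(p_shift, c_r):
    """D keeps whatever is left after conceding p' - c_R to R."""
    return 1 - post_shift_offer(p_shift, c_r)

def d_payoff_from_war(p_shift, c_d):
    """D's post-shift war payoff: 1 - p' - c_D."""
    return 1 - p_shift - c_d

# Hypothetical parameter values chosen only for illustration.
p_shift, c_r, c_d = 0.7, 0.1, 0.15

deal = d_payoff_from_deal(p_shift, c_r)
war = d_payoff_from_war(p_shift, c_d)

print(f"D's payoff from buying R off: {deal:.2f}")
print(f"D's payoff from war:          {war:.2f}")
# The gap equals the total cost of war, c_R + c_D, which the deal saves.
print(f"Surplus D keeps by avoiding war: {deal - war:.2f}")
```

The gap between the two payoffs is c_R + c_D whatever values are chosen, so D prefers the deal whenever war is costly.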

Now consider R’s decision whether to build in the first period. Let’s ignore the reject option, as D will never be silly enough to offer an amount that leads to unnecessary war. If R accepts x, it receives x for the rest of time. If it builds (and D does not prevent), then R pays the cost k and receives x today and p’ – c_R for the rest of time. Thus, R is willing to forgo building if:

x ≥ (1 – δ)x + δ(p’ – c_R) – (1 – δ)k

Solving for x (subtract (1 – δ)x from both sides, then divide by δ) yields:

x ≥ p’ – c_R – (1 – δ)k/δ

It’s as simple as that. As long as D offers at least p’ – c_R – (1 – δ)k/δ, R accepts. There is no need to build if you are already getting all of the concessions you seek. Meanwhile, D happily bribes R in this manner, as it gets to steal the surplus created by R not wasting the investment cost k.
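For a concrete feel, the following Python sketch evaluates R's first-period condition. The function names and the numbers (p’ = 0.7, c_R = 0.1, k = 0.2, δ = 0.8) are hypothetical choices for illustration only, and the sketch assumes D does not prevent if R builds.

```python
import math

def min_acceptable_offer(p_shift, c_r, k, delta):
    """Smallest x with x >= (1 - d)x + d(p' - c_R) - (1 - d)k."""
    return p_shift - c_r - (1 - delta) * k / delta

def build_payoff(x, p_shift, c_r, k, delta):
    """R's payoff from building: x today, p' - c_R afterward, minus the cost k.

    Assumes D does not prevent after R builds.
    """
    return (1 - delta) * x + delta * (p_shift - c_r) - (1 - delta) * k

# Hypothetical parameter values chosen only for illustration.
p_shift, c_r, k, delta = 0.7, 0.1, 0.2, 0.8

x_star = min_acceptable_offer(p_shift, c_r, k, delta)
print(f"Minimum offer that deters building: {x_star:.3f}")

# At the threshold, R is exactly indifferent between accepting and building.
assert math.isclose(x_star, build_payoff(x_star, p_shift, c_r, k, delta))

# Slightly stingier offers make building strictly better for R.
low = x_star - 0.05
print(f"Offer {low:.3f}: accept = {low:.3f}, "
      f"build = {build_payoff(low, p_shift, c_r, k, delta):.3f}")
```

With these numbers the threshold sits below p’ – c_R = 0.6. That gap is the discount D extracts because accepting spares R the investment cost k.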

The chapter looks at the same situation but with imperfect information: the declining state does not know whether the rising state built when it chooses whether to prevent. Things get a little hairy, but the states can still hammer out agreements most of the time.

I hope you enjoy the chapter. Feel free to shoot me a cold email with any comments you might have.